Artificial Intelligence and Healthcare in Scotland

This briefing explores the use of artificial intelligence (AI) in Scottish healthcare. The briefing explains what AI is, and provides an overview of existing policy, strategies and key stakeholders. It describes the current uses of AI in healthcare in Scotland, with examples. It also discusses the potential impacts of AI on healthcare, as well as some emerging challenges.

Executive summary

While there is no universally accepted definition of artificial intelligence (AI), Scotland's AI Strategy defines AI as "Technologies used to allow computers to perform tasks that would otherwise require human intelligence, such as visual perception, speech recognition, and language translation".

The Scottish Government aims to promote the use of "trustworthy, ethical and inclusive AI" across health and social care, as outlined in Scotland's AI Strategy, the Digital Health and Care Strategy 2021, and Scotland's Data Strategy for Health and Social Care 2023.

Currently, the Scottish Government aims to use existing leadership structures to promote the use of AI, rather than appointing an NHS AI champion. The Scottish Government is in the process of developing guidance regarding the adoption of AI for health and social care organisations.

Regulation of medical devices, including AI and software as medical devices, and data protection are reserved matters, so decisions on these matters are taken by the UK Parliament. UK medical device regulation is currently in a transitional phase following the UK's exit from the European Union (EU), but devices meeting EU standards are still accepted into the UK market at the time of writing.

The Scottish health AI sector involves many stakeholders. In the Scottish Government, policy on AI and healthcare is led by the Digital Health and Care Division. In NHS Scotland, most AI projects take place in territorial NHS Boards, while some national Boards, including but not limited to NHS Golden Jubilee and Healthcare Improvement Scotland, also play a role. AI development is often driven by the NHS Regional Innovation Hubs, in cooperation with academic and industry partners.

A small number of AI applications are already used in clinical practice in NHS Scotland. These include tools related to paediatric bone growth, the delivery of radiotherapy and speech recognition. Some of the newest medical imaging machines also have in-built AI features.

Many AI tools are currently being developed and tested in Scotland. These include AI tools that read medical images (such as breast screening x-rays), automate administrative tasks, help optimise resources, aim to predict and prevent diseases, and support self-management of long-term conditions.

The impact that AI will have on the cost of healthcare is uncertain. While AI has the potential to cut workloads and improve efficiency, new technology does not automatically lead to lower total costs, because it can result in higher demand for services. Sometimes new technologies fail to deliver desired benefits.

Awareness of AI in healthcare is generally low among the UK public, but people respond positively when prompted to consider specific descriptions of AI applications. Healthcare professionals also seem to take a broadly positive view of AI and other digital technologies that improve productivity.

Both the public and healthcare professionals express a preference for having a 'human in the loop', suggesting that AI should assist rather than completely replace human clinicians. Current AI technologies also rely on human involvement for safety.

Challenges related to the growing use of AI in healthcare include those around safety, generalisability, explainability, and current ambiguity around legal liability. Given that AI tools require large amounts of data to work accurately, data protection is also a significant challenge.

Algorithmic bias refers to systemic and repeatable features of AI systems that create unfair outcomes for specific individuals or groups. It can be caused by imbalanced training data, as well as decisions made during data collection and algorithmic design.

There are also some challenges relating to adoption, such as the possibility that AI tools trained with data from elsewhere must be recalibrated for the local population before they can be used in a specific location in Scotland.

Introduction

This briefing explores the use of artificial intelligence (AI) in healthcare in Scotland. It begins by explaining what AI is and providing an overview of existing policy, strategies and key stakeholders in the area. It then describes the current uses of AI in healthcare in Scotland, highlighting multiple current examples. It also discusses the potential impacts of AI on healthcare, as well as some emerging challenges.

This briefing does not cover social care, drug development or AI tools designed primarily for medical research. Robotics, a field connected to but separate from AI, also falls outside of the scope of this briefing.

Artificial intelligence

There is no universally agreed definition of artificial intelligence (AI).1 Scotland's Artificial Intelligence Strategy defines AI as follows:

Technologies used to allow computers to perform tasks that would otherwise require human intelligence, such as visual perception, speech recognition, and language translation.

Scottish Government. (2021). Scotland’s Artificial Intelligence Strategy: Trustworthy, Ethical and Inclusive. Retrieved from https://www.gov.scot/binaries/content/documents/govscot/publications/strategy-plan/2021/03/scotlands-ai-strategy-trustworthy-ethical-inclusive/documents/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive/govscot%3Adocument/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive.pdf [accessed 24 April 2024]

This briefing uses the same definition.i

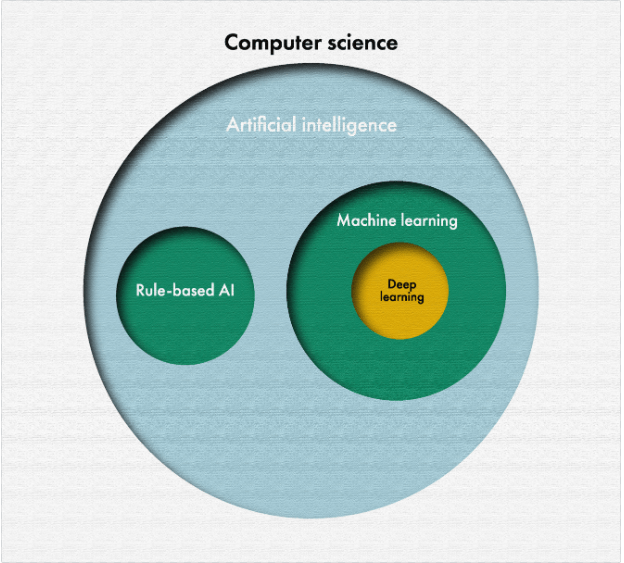

AI is based on algorithms. Algorithms are sets of instructions used to perform tasks, such as analysis or calculations. Until the 1980s, most AI algorithms relied on explicitly programmed rules.3 For example, such rules might suggest a potential diagnosis or treatment based on given symptoms.4 This kind of AI is often called rule-based or logic-based AI.

Rule-based AI can be contrasted with machine learning, which is behind much of the recent progress in AI. Machine learning algorithms learn by finding patterns in the training data, and then coming up with rules to describe the findings. This allows AI systems to perform tasks, such as image recognition, where programming explicit rules would be too difficult or time-consuming.5
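The contrast between the two approaches can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a clinical tool: the symptom used (a temperature reading), the cut-off values, the function names and the training examples are all invented for the purpose of the example.

```python
# Illustrative sketch only: all names, thresholds and data below are hypothetical.

def rule_based_flag(temperature_c: float, has_cough: bool) -> bool:
    """Rule-based AI: a person writes the decision rule explicitly."""
    return temperature_c >= 38.0 and has_cough


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Machine learning in miniature: choose the temperature cut-off that
    misclassifies the fewest labelled training examples."""
    candidates = sorted({temperature for temperature, _ in examples})

    def errors(cutoff: float) -> int:
        # Count examples where the prediction (temperature >= cutoff)
        # disagrees with the recorded label.
        return sum((temperature >= cutoff) != label for temperature, label in examples)

    return min(candidates, key=errors)


# Hypothetical labelled training data: (temperature, clinician flagged the case?)
training_data = [
    (36.5, False), (37.0, False), (37.4, False),
    (38.1, True), (38.6, True), (39.2, True),
]

learned_cutoff = learn_threshold(training_data)
print("Rule-based decision:", rule_based_flag(38.5, True))
print("Cut-off learned from data:", learned_cutoff)
```

In the first function the rule is fixed by whoever wrote it. In the second, the rule is derived from the examples, so different training data would produce a different cut-off.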

The term 'deep learning' is commonly used to refer to multiple advanced machine learning techniques.5 This and many other AI terms are further explained in a recently published, short blog by SPICe. The UK Parliamentary Office of Science and Technology (POST) has also produced a longer technical explainer on AI.

Policy background

This section describes the current policy and regulatory background for the development, adoption and use of AI in healthcare in Scotland.

Scottish policy and strategies

Scotland's Artificial Intelligence Strategy, published in 2021, sets out the following vision:

Scotland will become a leader in the development and use of trustworthy, ethical and inclusive AI.

Scottish Government. (2021). Scotland’s Artificial Intelligence Strategy: Trustworthy, Ethical and Inclusive. Retrieved from https://www.gov.scot/binaries/content/documents/govscot/publications/strategy-plan/2021/03/scotlands-ai-strategy-trustworthy-ethical-inclusive/documents/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive/govscot%3Adocument/scotlands-artificial-intelligence-strategy-trustworthy-ethical-inclusive.pdf [accessed 24 April 2024]

This vision aims to guide the use and development of AI in Scotland, including in healthcare.

The strategy noted that Scotland has potential to become a "go-to location" for the AI healthcare market. To this end, the strategy pledged to deliver a plan for a Life Science AI Cluster to accelerate AI development for healthcare.1 This also featured in the 2021-22 Programme for Government, which stated that the Scottish Government "will provide £20 million to develop an AI Hub for Life Science, NHS and Social Care".3

However, the Scottish Government has since re-prioritised resources elsewhere due to fiscal pressures. Instead, its current approach is to use the Data Strategy for Health and Social Care as the primary vehicle for accelerating AI development (Scottish Government personal correspondence).

The Scottish Government also aims to use existing leadership structures to support the use of AI, rather than creating new ones. In response to written question S6W-22009 in October 2023, the then Cabinet Secretary for NHS Recovery, Health and Social Care, Michael Matheson MSP, said:

Our intention therefore is to empower and support NHS leadership in the use of these new technologies, to help build and share a wider knowledge base, rather than appointing a single AI champion.

Scottish Parliament. (2023, October). Answer to question S6W-22009. Retrieved from https://www.parliament.scot/chamber-and-committees/questions-and-answers/question?ref=S6W-22009 [accessed 3 May 2024]

This approach differs from the one taken in England, where the NHS AI Lab is tasked with accelerating the adoption of AI in health and social care.

Issues relating to AI and healthcare have also featured in Scotland's 2021 Digital Health and Care Strategy; the associated Digital Health and Care Strategy Delivery Plan for 2022-23, 2023-24 and 2024-25; the NHS Recovery Plan 2021-26; Scotland's Data Strategy for Health and Social Care 2023; and the associated 2024 Data Strategy Update.

The 2021 Digital Health and Care Strategy states the Scottish Government's aim to equip health and care staff with the ability to use AI tools and to positively influence the development and eventual adoption of new technologies.5i The NHS Recovery Plan 2021-26 states that the Centre for Sustainable Delivery will work together with NHS Boards to accelerate the adoption of AI.6

The 2023 Data Strategy for Health and Social Care sets out ambitions for how data will be stored, shared, accessed, and used in health and social care in Scotland.ii It highlights the importance of a wide variety of factors, including:

data ethics

appropriate and timely access to data

developing better data infrastructure and improving interoperability

supporting research and innovation.7

The Data Strategy, overseen by the Health and Social Care Data Board, captures most of the more detailed work currently being undertaken by the Scottish Government in relation to AI and healthcare (Scottish Government personal correspondence).

Both the 2022-23 and 2023-24 Delivery Plans for the Digital Health and Care Strategy mention the Scottish Government's aim to deliver a national approach to the consideration of adoption of AI-based tools.89 In response to written question S6W-18818 in June 2023, the then Cabinet Secretary for NHS Recovery, Health and Social Care, Michael Matheson MSP said:

The recently released Data Strategy for Health and Social Care [2023] details a set of ethical principles for the use of data across our health and care sector. These principles are applicable to the use of AI as well as the broader use of data.

Furthermore, the Data Strategy sets out our intent to work towards the safe adoption of AI tools, working to international best practice. We have also committed to developing a policy framework for adoption of AI across health and [care] by Spring 2024.

Scottish Parliament. (2023, June 20). Answer to question S6W-18818. Retrieved from https://www.parliament.scot/chamber-and-committees/questions-and-answers/question?ref=S6W-18818 [accessed 26 April 2024]

At the time of writing, the policy framework for AI adoption mentioned in the answer is still being developed. The Data Strategy 2024 Update listed this as a priority for 2024-25.11 It is anticipated that this framework will be published in late 2024 (Scottish Government personal correspondence).

Wider UK and EU context

Policy on AI and healthcare in Scotland is affected by developments in a wider UK context. Powers related to health and economic development are devolved to the Scottish Parliament.1 Therefore, many decisions regarding the adoption of AI tools into the health service can be made in Scotland. For example, in May 2024, the Scottish Government announced a £1.2 million investment to roll out new theatre-scheduling software nationally. It is also possible for the Scottish Government to have its own initiatives to promote innovation in AI (such as the Scottish AI Alliance).

However, data protection and the regulation of medical devices are areas reserved to the UK Parliament.2 This includes the regulation of medical devices (including software as a medical device) by the MHRA (Medicines and Healthcare products Regulatory Agency) and the UK General Data Protection Regulation, which is enforced by the ICO (Information Commissioner's Office). The ICO also publishes relevant guidance, such as the Guidance on AI and Data Protection and the AI and Data Protection Risk Toolkit. Any development or use of AI tools in Scotland, whether in the public or private sector, must follow these UK-wide regulations.

At the time of writing, UK medical device regulation is in a transitional phase following the UK's exit from the European Union (EU). It remains subject to the Medical Devices Regulations 2002 (as amended), which transposed relevant EU Directives into UK law. The new UK regulatory regime was initially introduced through the Medicines and Medical Devices Act 2021, with the intention that secondary legislation would be laid using these powers on 1st July 2023.3 However, the UK Government has postponed this deadline and extended the application of previous EU standards. Under the current approach, medical devices are accepted into the UK market based on CE marking (the EU's regulatory mark) until 2028 or 2030 depending on the specific type of the device.4

As a part of the development of the UK's own medical device regulations, the MHRA is currently conducting the Software and AI as a Medical Device Change Programme. This programme aims to:

reform the existing regulations relating to software (including AI) as a medical device, and

consider challenges that AI poses over and above traditional software.5

Therefore, it is anticipated that the regulatory environment for healthcare AI technologies will change in the coming years.

As the UK and the EU move forward with different regulatory regimes for medical devices, the two regimes could diverge. The Scottish Government's policy is that Scottish laws should align and keep pace with EU law in devolved areas, where appropriate.6 However, as medical devices regulation is a reserved matter, it is not affected by this policy.

The EU has recently approved the new Artificial Intelligence Act 2024, which will control the use of AI in general, not just in healthcare.7 The Act will place some further obligations on providers of healthcare AI tools classified as 'high-risk' systems, above and beyond medical devices regulation.8 The UK Government, on the other hand, is currently planning to adopt a legislatively lighter, 'pro-innovation approach' to AI policy.9

Key stakeholders

The research, testing and adoption of AI in healthcare involves many organisations, including the Scottish Government, NHS boards, other public bodies, universities and private companies. This section describes the key actors in the Scottish AI and healthcare sector.

Scottish Government and related organisations

In the Scottish Government, national AI policy is led by the Digital Directorate. Together with the Data Lab, based in the University of Edinburgh, it supports the Scottish AI Alliance, which prepared Scotland's AI Strategy, and is also tasked with delivering it.1

Wider initiatives led by the AI Alliance include the development of the Scottish AI Register and the Scottish AI Playbook for the Scottish Government, Executive Agencies and Non-Departmental Government Bodies. These do not yet extend to the whole public sector, including health or social care.

Policy on AI in relation to healthcare is led by the Digital Health and Care Division within Director General Health & Social Care. Much of the policy in the area, including the Digital Health and Care and Data Strategies, is developed together with COSLA (the Convention of Scottish Local Authorities).

NHS Research Scotland is a partnership between the Chief Scientist Office of the Scottish Government and NHS Boards. It supports clinical research and maintains research infrastructure, such as the Data Safe Havens.2 The Safe Havens provide secure access to anonymised health records to academic and industry partners for research and innovation purposes, while maintaining NHS ownership of the data.3 This data is regularly used by the NHS Regional Innovation Hubs.

Research Data Scotland is a charity established and funded by the Scottish Government that helps with coordinating the Safe Havens and facilitating access for researchers.4

NHS Scotland

NHS Scotland has three regional innovation hubs, funded by the Chief Scientist Office (The West of Scotland Innovation Hub, The North of Scotland Innovation Hub, and Health Innovation South East Scotland). They cover all 14 territorial NHS Boards, aiming to foster collaboration between the NHS, academia and industry.1 The hubs have a leading role in driving healthcare AI innovation in Scotland (Scottish Government personal correspondence).i

In addition to playing a role in the development phase, the 14 territorial NHS Boards are also ultimately responsible for the deployment of AI technologies in their own areas. This is similar to other new medical technologies.

Some special NHS Boards also have relevant functions. NHS Golden Jubilee, which includes the Centre for Sustainable Delivery (CfSD), is involved in multiple AI pilots.3 The CfSD also runs the Accelerated National Innovation Adoption (ANIA) Pathway, which aims to fast-track the adoption of new, proven technologies across NHS Scotland.4

Healthcare Improvement Scotland (HIS) runs the Scottish Health Technologies Group (SHTG), which provides advice and recommendations on new technologies, including but not limited to AI.5 In some cases, the ANIA Pathway refers technologies for the SHTG to evaluate.6

NHS Education for Scotland (NES) delivers training for healthcare professionals. Some of the currently available learning materials on AI can be publicly accessed via NES' learning platform, Turas Learn.

NHS National Services Scotland (NSS) coordinates Scotland's national screening programmes together with territorial NHS boards.7 Many of these programmes, for example diabetic retinopathy screening, have the potential to be used with AI.8 The Scottish Ambulance Service (SAS) is aiming to become a test bed for technologies including AI,9 and it is currently trialling the use of AI in control centres to identify critically ill patients.10

Universities and industry

In addition to NHS Scotland, the development and testing of AI technologies in healthcare involves universities and industry partners.

In Scotland, the iCAIRD (Industrial Centre for AI Research in Digital Diagnostics) project was a significant research initiative on AI in healthcare from 2019 to 2023.1 It brought together the Universities of Aberdeen, Edinburgh, Glasgow and St Andrews, as well as NHS Scotland and private company partners. Founding companies of the project included Bering Ltd, Canon Medical Research Europe Ltd, Cytosystems Ltd, DeepCognito Ltd, Glencoe Software, Intersystems, and Kheiron Medical Technologies.2

The iCAIRD project received over £15 million of initial funding from UK Research and Innovation (UKRI) and private companies.2 Many AI collaborations that began during the project are still active.

Some of the Data Safe Havens are hosted by academic partners. For example, the Grampian Data Safe Haven, established with NHS Grampian, is situated at the University of Aberdeen.4

Rest of the UK

The regulation of medical devices and data protection are matters reserved to the UK Parliament. In the UK, medical devices are regulated by the Medicines and Healthcare products Regulatory Agency (MHRA).1 The Information Commissioner's Office (ICO) is responsible for promoting and enforcing data protection legislation. This includes the UK General Data Protection Regulation, Data Protection Act 2018, Environmental Information Regulations 2004 and the Privacy and Electronic Communications Regulations 2003.2

In some cases, AI innovation projects in the Scottish healthcare sector have received funding from NHS England.3 This includes the Artificial Intelligence in Health and Care Award based in England's NHS AI Lab.4 Currently, Scotland does not have a funding initiative like this in place. Instead, the Scottish Government's approach is to consider any new technologies on an individual basis, regardless of whether they include an AI element (Scottish Government personal correspondence).

The use of artificial intelligence in Scottish healthcare

This section describes some of the current and the potential near-future uses of AI in healthcare, with a focus on Scotland. It does not cover social care, drug development or AI tools designed primarily for medical research.

Current uses

There is a small number of AI tools already being used in clinical practice in Scotland. This section outlines most of these applications, but may not provide a comprehensive list.

BoneXpert is an AI-based method for automatically determining a child's bone age from radiographs of the hand. This helps clinicians spot potential growth disorders and other medical conditions.1 BoneXpert is used widely in the UK, including at NHS Greater Glasgow and Clyde and the Royal Hospital for Sick Children in Edinburgh.2

Ethos is a system that uses AI to automatically target and adapt the delivery of radiotherapy in cancer treatment. When a patient has cancer, the tumour and the surrounding tissue typically change as the treatment and/or the disease progress. Traditionally, this has required a lot of time from clinicians to draw up and continuously adjust treatment plans. The Ethos system uses AI to allow clinicians to make these changes more quickly.3 Ethos is currently used at the Beatson West of Scotland Cancer Centre.4

Some of the newest medical imaging devices utilise AI as an in-built feature. For example, the CT (computerised tomography) scanner at the Golden Jubilee Hospital in Clydebank uses AI to achieve clearer images in less time than previous models.5

Speech recognition software has improved significantly with recent advancements in machine learning. Many healthcare professionals now use it to speed up repetitive tasks around recording and checking clinical notes and letters.67

Knowledge-based clinical decision systems, which some people consider to be rule-based AI, are used around the world.89 In Scotland, the Right Decision Service offers a variety of digital tools for patients and clinicians to make evidence-based decisions.10 However, most people working in the area would generally not consider anything currently in the Right Decision Service as AI, viewing it as a traditional digital tool instead (Scottish Government personal correspondence).

Pilots

There are numerous AI tools that have not yet been widely adopted, but are being tested in clinical settings. These include tools for interpretation of medical images, administrative tasks, and prevention. Table 1 includes examples of current AI projects in these domains.

| Project name | Aim | AI tool | Partners | Funding | Status |
|---|---|---|---|---|---|
| GEMINI: Grampian’s Evaluation of Mia in an Innovative National breast screening Initiative12 | Making breast screening more accurate, reducing workload, potentially replacing one of two readers | MIA, AI tool that reads x-ray images | NHS Grampian, University of Aberdeen, Kheiron Medical Technologies | NHS England AI in Health and Care Award | Analysis ongoing with final results expected in 2024; BBC reported 11 additional women diagnosed with cancer |
| RADICAL: Radiograph Accelerated Detection and Identification of Cancer of the Lung34 | Triaging patients based on chest x-rays, focusing on lung cancer | qXR, AI tool that reads x-ray images | NHS GGC, University of Glasgow, Qure.ai | Qure.ai (50%), Scottish Government via CfSD bid (50%) | Trials ongoing in NHS GGC |
| Artificial Intelligence for Stroke Imagingi | Identifying acute stroke patients suitable for intravenous thrombolysis and mechanical thrombectomy | Three AI tools that read CT images under evaluation | NHS Tayside, NHS Grampian, NHS Lothian, NHS Greater Glasgow & Clyde | Scottish Government, NHS Scotland | Three potential solutions have been piloted and procurement exercise now being undertaken |
| Dynamic Scot567 | Preventing emergency admissions, supporting self-management for people with COPD (chronic obstructive pulmonary disease) | Dynamic-AI, predictive AI model | NHS GGC, University of Glasgow, Storm ID, Lenus Health | NHS England AI in Health and Care Award | Trial running in NHS GGC until January 2025 |
| New technology-enabled theatre-scheduling system8910 | Cutting waiting lists by making operating theatre scheduling more efficient | Infix, scheduling software that uses machine learning | NHS Lothian, SHTG (evaluation) | Software initially bought by NHS Lothian from the industry partner | In May 2024 Scottish Government announced £1.2m for national roll-out |

Medical imaging

AI tools can be used to help clinicians interpret images in various fields of medicine, including radiology, pathology, cardiology, dermatology, and ophthalmology. Below is a list of examples.

Radiology: AI can detect and categorise tumours,12 bone fractures (which can be signs of osteoporosis),3 infections (like pneumonia),45 critical head injuries,6 and stroke7 in x-ray, CT and MRI images

Pathology: AI can distinguish between benign and potentially malignant tumours in digital images of tissue samples8

Cardiology: AI can interpret echocardiograms (ultrasoundi images of the heart) to speed up the diagnosis of heart failure9

Dermatology: AI can help diagnose skin cancer from images of skin abnormalities10

Ophthalmology: AI can diagnose diseases like age-related macular degeneration11 and diabetic retinopathy12 from images of the retina (back of the eye)

Administrative tasks and resource optimisation

AI tools have the potential to cut the time required for various administrative tasks and help healthcare providers optimise the use of resources. Below is a list of examples:

Appointment scheduling: AI can help predict likely missed appointments, schedule them in a way that makes the patient more likely to attend, and make back-up bookings to avoid loss of clinical time1

Resource management and optimisation: for example, AI tools can automate and improve the efficiency of operating theatre scheduling2 and staff scheduling3

Hospital management: AI can be used to predict bed demand and find optimal discharge dates to avoid unnecessary discharge delays4

Preventative interventions and management of chronic conditions

AI can harness patient data to help prevent illness, predict risks and manage chronic health conditions. Examples include:

Prevention: AI could be used to identify patients at a high risk of hospital admission, so that early interventions can be offered to prevent A&E visits and admissions1

Management of chronic conditions: for example, predictive AI could help with the management of COPD (chronic obstructive pulmonary disease) by flagging patients at a higher risk of adverse events, allowing proactive interventions2

Self-management: AI could help patients independently manage certain conditions or improve lifestyles, for example with chatbots3

Potential future uses

In addition to live pilots, there is a lot of early-stage research aiming to discover further uses for AI in healthcare. Examples include:

Diabetes: AI could be used to integrate automatic glucose monitoring with insulin pumps to deliver an 'artificial pancreas', freeing type 1 diabetics from having to manually dose insulin1

Mental health: AI could use large amounts of data, for example from people's smart phone usage, to diagnose mental health problems, and AI-powered chatbots could even offer therapy2

Personalised medicine: AI could help clinicians optimise the type, dose and timing of medicines for the patient's individual health profile to achieve better outcomes with diseases like cancer3

While there could be many further beneficial uses of AI in healthcare, people working around this area also note that it is important to design solutions that centre on specific problems and the people affected, rather than the other way around. The principle of exploring and defining the problem before devising a solution is a key point in the Scottish Approach to Service Design.4

Potential impact on healthcare

AI could have a profound impact on the cost of healthcare, patients and healthcare professionals. This section discusses some of the potential scenarios.

Cost of healthcare

AI tools have the potential to improve productivity and save resources in healthcare by freeing up staff time, diagnosing diseases earlier, and preventing them. For example, AI tools that read medical images could allow images to be reviewed by just one human reader, instead of the current two-reader standard. Predictive AI could allow early intervention and help patients self-manage or remotely manage their conditions, decreasing hospital admissions. Automating administrative tasks could give clinicians more time to focus on care.

There is currently little research on the potential impact of AI on healthcare costs. A 2018 report published by the Institute for Public Policy Research, a think tank, estimated that realising the maximal potential of automation could save NHS England £12.5 billion annually. However, this figure included not just AI, but multiple further advances in automation, including robotics.1

Many of the promising results relating to AI have not yet been widely replicated in real-life clinical settings.23 Emerging evidence suggests that AI tools must be locally calibrated both before and some time after adoption, and their performance must be monitored.4 These factors could create some additional costs that are difficult to estimate.
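One simple form that local calibration can take is re-checking a tool's decision threshold against local data. The sketch below is an assumption-laden illustration rather than a description of how any tool named in this briefing works: the scores, labels, supplier threshold and target sensitivity are all invented, and the function names are hypothetical.

```python
# Illustrative sketch only: all data, thresholds and names are hypothetical.

def sensitivity(scores: list[float], labels: list[bool], threshold: float) -> float:
    """Share of true cases that the tool flags at a given score threshold."""
    positive_scores = [score for score, label in zip(scores, labels) if label]
    flagged = sum(score >= threshold for score in positive_scores)
    return flagged / len(positive_scores)


def recalibrate_threshold(scores: list[float], labels: list[bool],
                          target_sensitivity: float) -> float:
    """Return the highest threshold that still reaches the target
    sensitivity on locally collected, labelled cases."""
    for threshold in sorted(set(scores), reverse=True):
        if sensitivity(scores, labels, threshold) >= target_sensitivity:
            return threshold
    return min(scores)  # fallback if no threshold reaches the target


# Hypothetical local validation set: AI risk scores and confirmed outcomes.
local_scores = [0.2, 0.3, 0.45, 0.5, 0.6, 0.7, 0.8, 0.9]
local_labels = [False, False, True, False, True, True, True, True]

supplier_threshold = 0.65  # assumed threshold set on data from elsewhere
print("Local sensitivity at supplier threshold:",
      sensitivity(local_scores, local_labels, supplier_threshold))
print("Locally recalibrated threshold:",
      recalibrate_threshold(local_scores, local_labels, target_sensitivity=0.8))
```

Repeating the same check on fresh local data at intervals is one way the ongoing performance monitoring described above could be carried out, and it illustrates why calibration and monitoring carry a recurring cost.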

Historically, the introduction of new technologies has not automatically decreased healthcare costs. In some cases, projects aimed at digitising healthcare have failed, the UK's National Programme for IT in 2002-2011 being an often-cited example.5 It has been reported that this cost around £10 billion with few benefits realised.6

Even when new technologies are very successful, they can lead to increased total healthcare costs by leading to higher demand and/or better outcomes.7 To give a concrete but hypothetical example: if AI cuts the cost of cancer screenings, there might be higher demand for screening which might result in more cancers being found which require treatment. If the treatment is successful, the patients are likely to use further healthcare services in the future.
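The arithmetic behind this hypothetical scenario can be made explicit. Every figure in the sketch below is invented purely to show the mechanism (unit costs, screening volumes, detection rate and treatment cost are not estimates from any source), and the function name is hypothetical.

```python
# Illustrative sketch only: every number below is invented, not an estimate.

def total_cost(screenings: int, cost_per_screening: int,
               cancers_per_1000: int, treatment_cost: int) -> int:
    """Total spend in pounds = screening spend + treatment spend for cancers found."""
    cancers_found = screenings * cancers_per_1000 // 1000
    return screenings * cost_per_screening + cancers_found * treatment_cost


# Before AI: 1,000 screenings at £100 each, 10 cancers found per 1,000 screened.
before = total_cost(screenings=1000, cost_per_screening=100,
                    cancers_per_1000=10, treatment_cost=5000)

# After AI: each screening is cheaper (£60), but demand rises to 1,500 screenings.
after = total_cost(screenings=1500, cost_per_screening=60,
                   cancers_per_1000=10, treatment_cost=5000)

print("Total cost before:", before)
print("Total cost after:", after)
```

Under these assumed figures, screening spend falls while total spend rises, because more screening finds more cancers that need treatment. Different assumptions could just as easily produce a net saving, which is why the overall effect on cost is uncertain.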

Impact on patients

Many of the advantages of AI that could lead to savings in healthcare costs also offer potential benefits to patients. These include earlier and more accurate diagnosis, fewer serious complications and hospital admissions, and better opportunities for self-management.

A 2023 survey commissioned by the British Standards Institution found that just over half (54%) of the UK respondents were positive about AI being used for diagnostic purposes.i Just under half of the respondents supported the use of AI to reduce waiting times (49%) and to meet staffing demands (48%). The same study also found a relatively strong desire for patients being informed when AI is used (65%) and for international guidelines ensuring AI is used safely (61%).1

A survey by NHS England of members of the public found a relatively low level of awareness of how AI can be used in health and social care, with 48% of respondents having heard very little or nothing about it. However, when presented with 14 specific examples of how AI could be used, at least 56% of the respondents said they would feel 'very' or 'relatively' comfortable across all the examples. The same research found three main themes that promote patient trust:

'Having a human in the loop', so that professionals make the ultimate decisions, while using AI as a supporting tool

Being offered 'open and honest information' on the regulatory process and data protection around AI tools

Having 'proof of the impact' to show the benefits of AI2

In addition to questions of trust and awareness, some people are concerned that the use of AI in some areas of healthcare, such as triage or personal care, could feel inhumane.3 On the other hand, there are also some cases where patients might find it easier to interact with an AI system, for example when it comes to sexual health.4

Impact on healthcare staff

At the time of writing, there are no comprehensive surveys on attitudes towards AI among UK healthcare professionals, but there are some field-specific surveys and qualitative studies. A recent academic survey of people who work in breast screening found that most respondents supported replacing one of the two human readers (as is the standard) with AI but objected to replacing human readers completely.1 A qualitative study in England found a broadly positive perception of AI among healthcare staff, though some worries were raised in relation to additional workload, data protection, potential misdiagnosis and the prospect of removing humans from care.2

AI could require healthcare staff to learn new skills and adapt to new ways of working. A report by the NHS AI Lab and Health Education England concluded that developing healthcare workers' confidence in AI requires educational resources for all staff members at all points of their careers.3 Procurement practices may also need to adapt to AI, given how quickly these technologies and the guidance and regulation around them develop. (Scottish Government personal correspondence)

The need for healthcare staff to adapt to new technologies and digital tools, including but not limited to AI, is recognised in the National Workforce Strategy for Health and Social Care in Scotland. The Strategy states:

Workforce development in digital skills, leadership and capabilities across the whole health and care sector underpins the successful uptake and use of digital technologies. That is why we must continue to work with partners to ensure our entire workforce has the necessary skills and confidence to embrace the new ways of working that digital brings.

Scottish Government. (2022). National Workforce Strategy for Health and Social Care in Scotland. Retrieved from https://www.gov.scot/binaries/content/documents/govscot/publications/strategy-plan/2022/03/national-workforce-strategy-health-social-care/documents/national-workforce-strategy-health-social-care-scotland/national-workforce-strategy-health-social-care-scotland/govscot%3Adocument/national-workforce-strategy-health-social-care-scotland.pdf [accessed 21 June 2024]

In Scotland, NHS Education for Scotland (NES) delivers training for healthcare professionals. Some of the currently available learning materials on AI can be publicly accessed via NES' learning platform, Turas Learn. NES runs the Digital Health and Care Leadership Programme, which aims to help participants develop the leadership skills needed to navigate digital solutions.

In 2023, NES also launched the Leading Digital Transformation in Health and Care for Scotland programme. This is a postgraduate course, designed to help leaders deliver digital transformation in health and care. It is delivered by the University of Edinburgh and funded by the Scottish Government.5

In a recent academic survey of UK radiology trainees, 98.7% of the respondents felt that AI should be taught as part of their training, but only one out of the 149 respondents said that their training programme included it. As the authors of the survey state, this implies that there is "strong support for further formal training" on AI.6

Potential challenges

This section discusses some of the potential challenges that healthcare AI may pose to patients, healthcare professionals, regulators and wider society.

Safety

Like all medical technologies, AI raises questions about patient safety. AI tools, if designed or used poorly, could lead to harm by failing to diagnose health conditions correctly.1 However, AI could also improve patient safety, for example by reducing errors in communication between clinicians and making that communication more efficient.2

At the time of writing, the AI tools used and tested in the Scottish healthcare sector are generally designed to assist humans, rather than work independently. Therefore, the safe use of these tools still relies on human involvement. This idea of having a 'human in the loop' is also supported by clinicians and patients.

Inappropriate use of AI tools can also compromise safety. In 2023, the World Health Organization (WHO) urged that caution be exercised with AI large language models in the context of their "meteoric public diffusion and growing experimental use".3 There are media reports of lay people using ChatGPT to answer medical questions and even provide therapy, even though experts warn against this.45

Explainability

Another technical issue relating to AI, specifically machine learning, is the potential lack of explainability (or interpretability). This means that it is not always possible to fully explain how a complicated machine learning system has reached its decision. This is due to the nature of machine learning algorithms. Unlike rule-based AI, machine learning does not rely on explicitly programmed rules that would be easy for the developer to explain.1
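The contrast between rule-based and machine learning systems can be illustrated with a deliberately tiny Python sketch. Everything in it is hypothetical: the thresholds in `rule_based_flag`, the two input features and the six training examples are invented, and a simple logistic regression stands in for the far larger models used in practice.

```python
import math


def rule_based_flag(systolic_bp, heart_rate):
    """Rule-based: the written rules are themselves the explanation."""
    return systolic_bp < 90 or heart_rate > 130  # invented thresholds


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, epochs=2000, lr=0.5):
    """Fit weights by gradient descent; no rule is ever written down."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = sigmoid(z) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def learned_flag(x, weights, bias):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z) >= 0.5


# Invented, pre-scaled training data: (feature_a, feature_b) -> label
samples = [(0.2, 0.9), (0.3, 0.8), (0.8, 0.3),
           (0.9, 0.2), (0.7, 0.4), (0.1, 0.7)]
labels = [1, 1, 0, 0, 0, 1]

weights, bias = train_logistic(samples, labels)
print("Learned parameters:", weights, bias)
```

The behaviour of the rule-based function can be read directly from its source code. The behaviour of the learned model lives in numbers fitted to data. With two weights these are still easy to inspect, but systems with millions of parameters cannot be read in the same way, which is where the explainability concern arises.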

In the context of healthcare, the exact importance of explainability is debated. Some worry that the lack of explainability can:

make it difficult to resolve disagreement between AI tools and human clinicians

disempower patients by making decisions about care harder to understand and challenge

raise legal and ethical problems with determining responsibility.2

Others point out that explainability sometimes comes at the cost of lower accuracy. They also note that there are many things in medicine that we routinely use, despite not fully understanding the underlying mechanism, because their safety and efficacy have been shown in practice.3

Generalisability and bias

The performance of AI tools depends on the data that they have been trained on. This means that even very good performance in one setting does not automatically generalise to other settings. If the patient population or the clinical circumstances that the AI is deployed in differ from the ones it was trained on, performance can be impaired.12

Algorithmic bias refers to systemic and repeatable features of AI systems that create unfair outcomes for specific individuals or groups, often amplifying existing inequality or discrimination. It can be caused by imbalanced or incomplete training data, as well as decisions made during data collection and algorithmic design.3 For example, an AI system designed for diagnosing skin cancer that is less accurate with dark-skinned patients as a result of being trained primarily with images from light-skinned patients exhibits algorithmic bias. Similar examples can be found for bias along gender, age, sexuality, and many other dimensions.4
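One common way to look for this kind of bias is to compare a tool's performance across patient subgroups rather than relying on a single overall figure. The Python sketch below computes sensitivity (the share of true cases that the tool flags) for each group. The group labels and the records are invented for illustration, and the function name `sensitivity_by_group` is an assumption rather than part of any real evaluation toolkit.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_condition, model_flagged) tuples."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


# Invented data: 10 patients with the condition in each group.
records = (
    [("group_a", True, True)] * 9 + [("group_a", True, False)] * 1
    + [("group_b", True, True)] * 6 + [("group_b", True, False)] * 4
)

result = sensitivity_by_group(records)
for group, value in sorted(result.items()):
    print(f"{group}: sensitivity {value:.0%}")
```

In this invented example the tool misses one in ten cases in the first group but four in ten in the second, a gap that an overall figure of 75% would hide.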

Privacy and data protection

The large quantity of data needed to train AI, together with the fact that most AI development involves private companies, raises questions relating to data protection and privacy.

In 2017, the ICO found that the Royal Free Hospital, in London, had breached UK data protection law by giving patient data to the AI company DeepMind, for the purpose of testing a kidney failure detection app. The case has received public attention12 and been described as a 'cautionary tale' by academics.3 However, some people working around this topic argue that the central feature of this case was the failure to comply with data protection law, rather than the use of AI in itself.

The ICO has published two discussion papers outlining its thinking on big data, AI and data protection, in 2014 and 2017. In the 2017 paper, Big data, artificial intelligence, machine learning and data protection, a key point is that while AI technologies are new, the way that they need to use personal data is not. Therefore, the existing data protection legislation in the UK provides a strong framework for AI. One central feature of this is the principle of 'privacy by design' that developers of new technologies must follow as a legal requirement.4

In Scotland, the Safe Havens provide access to NHS health records to academic and industry partners for research and innovation purposes, when it is not practical to obtain consent from individual patients. Their operating principles are set out in the Safe Haven Charter. According to the Charter, the Safe Havens will protect patients' privacy and refuse to sell data to commercial organisations.5 The Safe Haven Charter is currently being refreshed by Research Data Scotland. (Scottish Government personal correspondence)

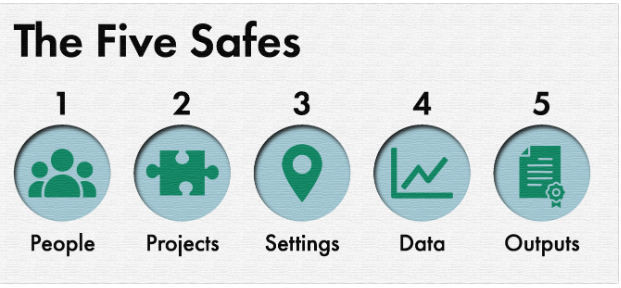

NHS Safe Havens, like all UK trusted research environments, follow the Five Safes framework. The framework was originally created by the Office for National Statistics in collaboration with other data providers in the 2010s to help keep data secure.6

The Five Safes are:

Safe People: researchers accessing data are subject to an appropriate accreditation process

Safe Projects: data must be used ethically and for the public benefit

Safe Settings: the physical spaces used to access data are secured and monitored

Safe Data: researchers only access the data necessary for their project

Safe Outputs: research outputs are checked to make sure individuals remain unidentified.7

There is some ongoing work in the Scottish public sector on improving data sharing and storage. The intention is to build AI systems that would rely on already available data in closed environments. This reduces the risk of data breaches. (Scottish Government personal correspondence)

Legal issues

Some healthcare professionals have expressed concern about the current lack of clarity on AI and legal liability. In principle, responsibility for harm caused to patients by AI tools could fall to the clinician using the tool, the deploying organisation, those who validated the technology or those who created it.1 What would happen in practice is unclear, because there is little to no established case law in the area.23 Commentators have suggested that the current legal regimes of clinical liability and product liability are inadequate for dealing with AI as a medical device.4

A 2022 report by the Regulatory Horizons Council identified multiple challenges relating to the regulation of AI as a medical device. These included many of the challenges already mentioned, including data protection, interpretability, generalisability and bias. Additionally, the report highlighted the following regulatory difficulties:

limits on regulatory capacity in a complex and quickly evolving area of technology

managing post-market evaluation in the context of frequent updates to the AI

regulating likely future AI tools that keep learning while being used, and thus may, over time, become a different product from the one that first received regulatory approval.5

Challenges with local adoption

Some challenges with AI arise specifically when trying to adopt new tools in local contexts. Due to the nature of the technology, AI tools often cannot be brought in from elsewhere and implemented directly. Instead, AI tools that are trained with data from other areas would need to be calibrated locally when deployed. For example, researchers in Aberdeen found that an AI breast cancer detection tool called MIA produced a very high recall rate before being calibrated with local data.1
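As a rough illustration of what local calibration can involve, the Python sketch below picks a decision threshold from local data so that the share of cases flagged for further assessment stays within a target. This is a simplified assumption about one possible approach, not a description of how MIA or any other product is actually calibrated. The scores, the supplier's default threshold and the target rate are all invented, and "recall rate" is taken here to mean the share of screened cases called back.

```python
def recall_rate(scores, threshold):
    """Share of cases whose AI score meets or exceeds the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


def calibrate_threshold(local_scores, target_rate):
    """Lowest threshold at which the local recall rate is within target."""
    best = float("inf")  # flag nothing if no threshold meets the target
    for candidate in sorted(set(local_scores), reverse=True):
        if recall_rate(local_scores, candidate) <= target_rate:
            best = candidate
        else:
            break
    return best


# Invented AI scores for ten local cases, and an invented default threshold.
local_scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.62, 0.70, 0.80, 0.90]
default_threshold = 0.5

uncalibrated = recall_rate(local_scores, default_threshold)
local_threshold = calibrate_threshold(local_scores, target_rate=0.2)
calibrated = recall_rate(local_scores, local_threshold)

print(f"Recall rate at default threshold: {uncalibrated:.0%}")
print(f"Locally calibrated threshold: {local_threshold}")
print(f"Recall rate after calibration: {calibrated:.0%}")
```

A real calibration exercise would also need to check that raising the threshold does not cause the tool to miss too many true cancers, which this sketch does not attempt.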

In addition to the factors relating to the local population, AI tools might be very sensitive to changes in the clinical environment. Researchers found that with MIA, the performance of the AI algorithm changed when there was a software update to the x-ray machine that was taking the images.1 Though other possible reasons for the change could not be ruled out, the finding suggests that retaining AI performance in changing clinical settings remains a challenge for the current technology.

Many of the challenges with adopting AI in healthcare settings relate to infrastructure, rather than the AI technology itself. AI tools can only be used to their full potential if all areas have the required physical and IT infrastructure (such as internet connection, computing power and secure processing systems) in place. (Scottish Government personal correspondence)

If AI systems become more widely used, questions about coordination across NHS boards may arise. Successful adoption of new technology tends to require buy-in from local users: for example, researchers have suggested that one reason behind the failure of the UK's National Programme for IT was inadequate end user engagement.3

However, some people working in the area suggest that a decentralised approach could also lead to problems. Currently, NHS Boards have varying levels of experience of using AI. Different levels of AI adoption could increase health inequalities between areas, and adopting different systems might make data sharing and patient pathways complicated. Differing levels of expertise and lack of knowledge-sharing could mean that NHS boards duplicate effort or commit to the wrong AI solution.

These challenges are not unique to AI, however. Some of them were already noted by the Health and Sport Committee in 2018, as a result of an inquiry into Technology and innovation in health and social care. The inquiry considered technological innovation in general, rather than AI. The Committee's final report highlighted themes including:

The need for a national, 'Once for Scotland' approach for technology and innovation, rather than board by board decisions

The importance of data sharing and interoperability (different IT systems being able to exchange and make use of information)

The need to do more to promote the uptake of new technology in NHS Scotland4

The AI and healthcare sector is characterised by rapid advancements in technology, evolving regulatory frameworks, growing public and professional awareness, and increasing adoption. Therefore, there are likely to be many further challenges that arise in the future.